The real challenge in AI adoption in localization isn’t the tech; it’s the pressure to pretend it’s already working

Three years ago, during Christmas 2022, I was standing in a long line at a crowded bakery in Spain, waiting to pick up our roscón de Reyes for Día de los Reyes Magos. It was one of those chaotic moments. People everywhere, kids running around, orders being shouted over the counter.

While I waited my turn, I opened Evernote and scrolled through my “review later” folder. That’s where I save things I don’t have time to read during the day, but hope to revisit on a quieter evening or weekend. And there, something caught my eye. It was an article about a new thing called ChatGPT.

Out of curiosity, I installed the app on my phone right there in the bakery. Within minutes, I was hooked. “Wow, this is cool,” I thought. “I could really use this.”

Back then, I didn’t see it as a “machine translation” tool. Not even close. I hadn’t considered AI as a localization enabler. For me, it felt more like a supercharged version of Wikipedia: something to quickly explore ideas, get explanations, and maybe support a bit of research here and there. I had no idea how much it would grow or how fast it would change the conversations around localization.

A lot has happened since that moment in the bakery, waiting for my roscón.

After three years of watching, reading, testing, and, yes, sometimes fighting with AI tools in localization, I believe I’ve witnessed the full cycle. The hype, the pressure, the pilot projects, the excitement, the denial, the disappointment…

These three years have been intense. A real rollercoaster when it comes to AI.

At first, everyone was excited. AI was the hot topic. Then came the pressure.

“How fast can we implement this?”

“Can we automate the entire flow?”

That excitement quickly turned into urgency, and then into confusion in our localization world. And then, the question we now hear almost everywhere. A question that, back in that bakery queue, I couldn’t have imagined:

“Why do we still need human review to localize content?”

I’ve seen a lot of hype with AI. I’ve sat in meetings where AI was treated like a magic button. I’ve tested tools that promised automation but created even more clean-up work. I’ve spoken to teams who quietly admitted their AI pilots didn’t really work, but didn’t feel safe saying it publicly.

So now, a few years in, I think it’s time for a reality check. Not from a technical point of view. Many people are already doing that.

But from inside the localization trenches. From someone who’s been living this shift day to day.

Because the biggest challenge in AI adoption, as I see it, isn’t the tech; it’s the pressure to pretend it’s already working.


Here are the five most common hurdles I see across companies, tools, and teams. And what we can actually do about them.

1. Pressure from the Top

“Why aren’t we automating more?”

From the outside, it looks like everyone is implementing AI. It’s in investor calls, roadmaps, and quarterly plans. So naturally, leadership starts asking questions. And they’re fair questions:

Why is this still manual?

Why aren’t we moving faster?

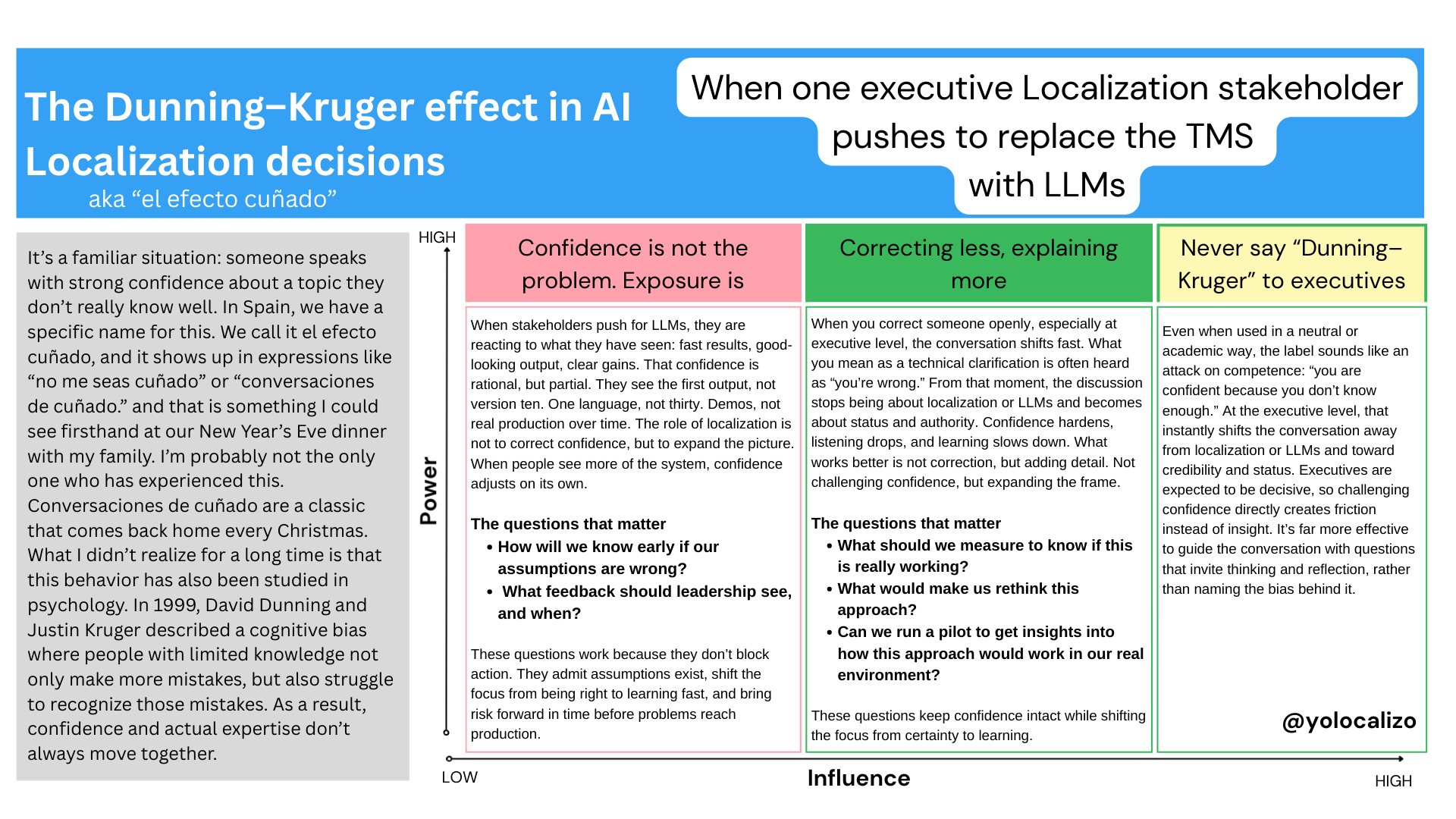

But the reality behind the scenes is more complex. Many leaders fail to recognize the edge cases, cross-functional blockers, or quality trade-offs inherent in localization workflows. AI is often seen as a silver bullet. But without operational understanding, that view leads to unrealistic timelines and risky decisions.

What helps to balance this leadership pressure:

Share real examples. Show where AI works and where it still struggles.

Propose low-risk pilots before rolling out to production.

Frame AI adoption as a journey, not a checkbox.

2. Mismatch of Expectations

Automation is not magic

One of the biggest misconceptions is that AI will replace people, that it’s “push a button, done.” But real workflows involve context, tone, and brand alignment: things AI models don’t fully understand (at least not yet!).

I’ve seen teams try to fully automate too early. Then they realize they still need human review, prompt tuning, QA, and post-editing. Suddenly, the time saved disappears. And worse, quality drops.

What helps to align expectations:

Be clear about where AI supports, and where humans lead.

Keep human-in-the-loop review steps, especially for sensitive or public, user-facing content.

Normalize learning cycles. The first version is rarely the final one.

3. Tech Integration Gaps

It doesn’t just plug in

It’s one thing to test an AI tool in isolation. It’s a different story to make it work with your CMS, TMS, content design systems, or UX tools.

Most of these tools weren’t built with localization in mind. Retrofitting them adds unexpected complexity and overhead.

I’ve seen promising pilots stall simply because integration wasn’t part of the plan early on. The tool might be great, but if it doesn’t fit your systems, it can cost you time, budget, and momentum.

What helps to close the gap:

Involve engineers early.

Prioritize tools that play well with your systems. Look for APIs that can connect to your localization ecosystem.

When meeting with tech vendors, ask for real demos of how integration works. Not just slides. As we say, PowerPoint will put up with anything.

4. Hallucinations and Cost Risks

When AI gets it wrong, your brand pays

Yes, hallucinations still happen. Even with the latest models. And while the progress over the last three years is incredible, the problem remains. AI can sound confident even when it’s completely wrong.

I’ve seen it get product names wrong, misuse brand tone, and generate translations that reverse the meaning entirely.

This is more than a bug; it puts brand perception at risk. If we don’t catch these errors, clients might notice. And when AI introduces errors at scale, fixing them takes time and money, and trust has to be rebuilt.

What helps to guard against hallucinations:

Start small and low-profile. Use AI for internal docs, FAQs, or support content.

Always, always, always keep humans involved in customer-facing materials.

Track AI errors. They are useful training data for both your team and the tools.

5. Data Quality Needs

Garbage in, garbage out

This one is not new. But it’s more critical than ever.

AI mirrors what we feed it. If our source content is vague, inconsistent, or not written with localization in mind, the output will reflect that. Clean source content has always been important. Now, it’s non-negotiable.

What helps to improve source quality:

Run regular audits of your source content.

Build style guides and reference examples.

Collaborate with other teams like product, UX, and content design to improve inputs before they even reach localization.

Final thoughts

There’s no doubt AI is transforming how we work. But we can’t skip the complex parts, and we shouldn’t pretend we’re further along than we are. We should be honest about what’s working and what isn’t.

We should run pilots with purpose. And if you’re feeling the pressure, you’re not alone. If you’re still trying to figure out how to make it work without breaking your workflows or burning out your team, you’re not behind. You’re just being realistic.

AI will be a powerful tool for localization, but only if we approach it realistically. Start sharing small wins, real concerns, and grounded use cases. That’s how we move forward without pretending everything’s perfect.

@yolocalizo

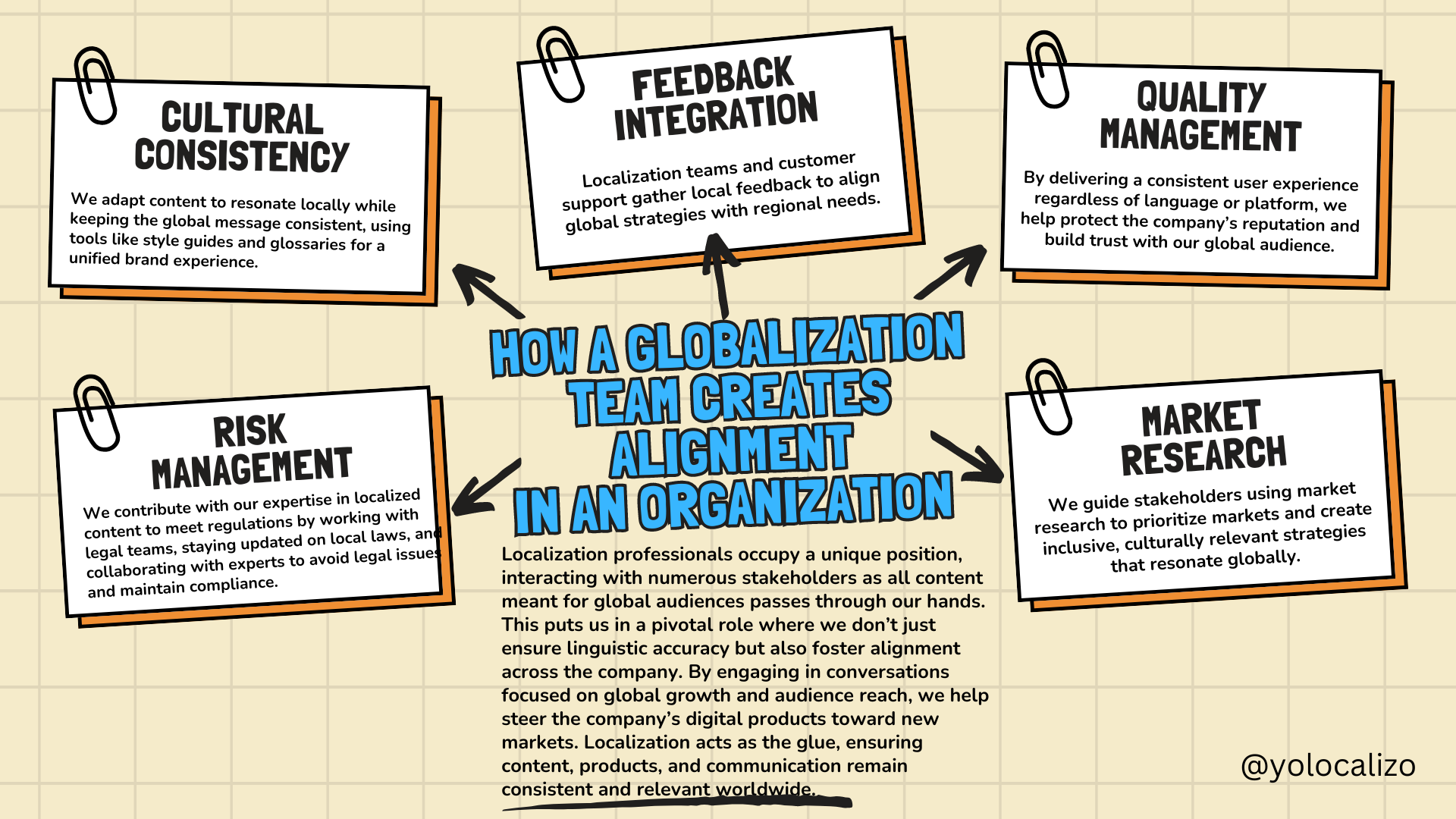

Localization professionals often focus on translation quality and best practices, but decision-makers care about customer impact and revenue. If we frame localization as a cost, it risks being deprioritized. Instead, we must highlight its value in driving engagement, trust, and business growth.