Maybe it’s time for cultural LLMs: understanding the cultural impacts of AI

Seven years ago, my “twin” Maria and I stood on the Warsaw LocWorld stage and gave a talk titled:

“How to Increase Your Cultural Intelligence (CQ) in the Localization Industry.”

At the time, the focus was on people. I shared stories about leading multicultural teams in Finland, Sweden, China, and the U.S. and the unexpected challenges that came not from language but from deep cultural differences in how we give feedback, make decisions, and build trust. Maria did the same. She’s been leading multicultural teams for years, and she carries both Polish and Canadian perspectives in her DNA.

Back then, the big question was:

How can humans work better across cultures?

Today, that question still matters. But now there’s a twist:

How can machines work better across cultures?

And maybe more importantly:

How can humans lead, and AI power, this new global era together?

Because even the most intelligent AI doesn’t know what not to say in Japan, how to gain trust in Brazil, or why an “efficient” apology might feel disrespectful in the UAE.

It’s easy to think this is all about data. But we’re dealing with how people connect, feel respected, and build trust. That’s always been a human thing.

Multilingual ≠ Multicultural

In 2025, LLMs are everywhere.

They write emails, summarize meetings, run customer service, and translate across dozens of languages.

However, we often fail to realize that they might speak the language but don’t understand the culture.

Most AI systems are trained on massive datasets dominated by English, Western, and individualistic worldviews. They respond in ways that may be technically correct but emotionally tone-deaf.

Take Klarna. The company launched an AI assistant that replaced 700 support agents, handled millions of chats in 35 languages, and reduced response times by 82%.

Technically, it was a huge success! With all those savings and efficiencies, it looked perfect (in theory, and depending on whom you ask, of course). But just over a year later, Klarna began rehiring humans.

Customer satisfaction had dropped.

The system didn’t meet people’s expectations.

Why?

Because Klarna optimized for languages, but overlooked cultures.

It treated human communication as a translation problem, not a relationship problem.

What I learned about CQ back then

Our LocWorld presentation talked about cultural intelligence (CQ), which is the capability to work effectively across cultures. We introduced Erin Meyer’s Culture Map model, which outlines 8 dimensions of cultural difference:

Communication (high vs. low context)

Feedback (direct vs. indirect)

Leadership (egalitarian vs. hierarchical)

Decision-making (top-down vs. consensual)

Trust-building (task-based vs. relationship-based)

...and more.

Understanding these differences helps humans lead better.

But now we’re asking AI to play in the same space, without any of this cultural context.

So the question becomes:

Are we building AI that can operate globally, or just translate globally?

Maybe It’s Time for Cultural LLMs

We’ve spent the last 2–3 years racing to scale AI globally. We read about training massive models to speak dozens of languages and serve millions of users. But somewhere along the way, I feel that we’ve treated culture as a secondary concern or maybe not even a concern at all, just something seen as “nice to have.”

“Translation is solved. What’s next?” I’ve heard that a lot over the past year.

Even assuming that claim were true (spoiler alert: it’s not), cultural accuracy is nowhere near solved. Cultural fit simply isn’t a priority in how LLMs are trained today.

What if we took a different path?

What if we embraced cultural diversity as a design principle instead of aiming for a single, “universal” AI model?

What if we had cultural LLMs, models fine-tuned not only by language or region but also by how people expect to be treated?

So let’s make it concrete. What would it mean to actually design AI with culture in mind?

Imagine:

A Japanese model that understands meiwaku and values humble, layered communication.

A UAE model that avoids transactional-sounding apologies and instead prioritizes dignity and respect.

A Latin American model that builds trust through relational warmth and expressiveness.

Getting the language right is only the beginning. What truly matters is making the interaction feel human and local. These models need to understand our context, not just speak our language.

How Could We Build Cultural LLMs?

Ideas from a Localization Professional’s Lens


As localization professionals, we’ve always been cultural interpreters. Now, we have an opportunity (and a responsibility) to lead AI in the same way.

Here’s where we could start:

1. Curate culturally rich, intentional datasets

Go beyond documentation and websites. Build corpora from sources reflecting how people speak, joke, complain, or build trust.

What to include:

Regional media (TV, radio, podcasts, YouTube interviews)

Annotated customer service conversations (with permission and anonymization)

Local storytelling formats, idioms, humor, and disagreement styles

Human-reviewed tone-of-voice guides used in marketing, UX writing, and localization

Where and how to get it:

Partner with local customer experience/support teams to gather anonymized real-world conversations (chat logs, email threads, or transcripts) that reflect how users express needs, frustration, or appreciation in their own words.

Collaborate with in-market agencies, voice and tone reviewers, or content strategists

Use open-source text datasets (OSCAR, CC100 …), which contain web content in many languages. Filter by language and country domain to isolate culturally relevant data for each market.

Scrape public domain cultural content while respecting privacy and consent

Commission cultural content audits to identify emotional and linguistic patterns per region

The goal is to give the model the kinds of examples that reflect how people build relationships through tone, emotion, and cultural nuance.
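
To make the “filter by language and country domain” idea a bit more tangible, here is a minimal Python sketch. It assumes each record already carries a URL and a language tag. The record shape, the sample data, and the function names are hypothetical, and real corpora such as OSCAR or CC100 expose their own formats.

```python
from urllib.parse import urlparse

# Hypothetical record shape: page URL, language tag, and text.
# Real corpora use their own field names and formats.
records = [
    {"url": "https://example.com.br/ajuda", "lang": "pt", "text": "Oi, tudo bem?"},
    {"url": "https://example.pt/ajuda", "lang": "pt", "text": "Olá, como está?"},
    {"url": "https://example.com/help", "lang": "en", "text": "Hi there"},
]


def matches_market(record, lang, country_tld):
    """Return True when the record is in `lang` and hosted under `country_tld`."""
    host = urlparse(record["url"]).hostname or ""
    return record["lang"] == lang and host.endswith("." + country_tld)


def filter_for_market(records, lang, country_tld):
    """Keep only the records that match both the language and the country domain."""
    return [r for r in records if matches_market(r, lang, country_tld)]


# Portuguese text hosted under .br, as a rough stand-in for "Brazil".
brazil = filter_for_market(records, "pt", "br")
print(len(brazil), "record(s) kept for the Brazilian market")
```

A country domain is only a rough proxy for culture, so a filter like this is a starting point for human review, not a replacement for it.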

2. Bring cultural experts into the AI workflow

AI shouldn’t be left to engineers alone. Culture and language teams already do this work; now, they should have a seat at the table.

Who to involve:

Local market linguists

Intercultural consultants and sociolinguists

DEI practitioners and community researchers

Brand voice experts familiar with tone and register across cultures

Where to plug them in:

During prompt design for tone calibration

In reviewing model responses for cultural alignment

While defining acceptable tone, politeness levels, or interaction style per market

In identifying potentially offensive or confusing patterns

Right now, many localization professionals are being asked to fix AI-generated content after the fact: to polish tone, fix style, or rewrite what doesn’t quite land. But as we’ve said in this industry for years, quality starts at the source. If the input is wrong, no amount of post-editing can make it truly right.

3. Build modular cultural behavior layers

Building a different AI model for every culture can feel overwhelming, even paralyzing. But we don’t have to start there. A more realistic step is to create modular “cultural behavior packs”, flexible components that help the model shift how it communicates depending on the audience.

Here’s what such a pack could include:

Rules for formal vs informal tone

Preferred apology and politeness strategies

Country-specific conflict-resolution patterns

Expectations around hierarchy or consensus

Tone filters (e.g. warmth vs. efficiency) depending on region
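
To picture what a pack might look like in practice, here is a small Python sketch of one as a plain data structure. Everything in it is an assumption for illustration: the `CulturalPack` class, its field names, and `get_pack` are hypothetical, and the values only echo the examples in this post. They are placeholders, not validated cultural guidance.

```python
from dataclasses import dataclass

TBD = "to be defined with in-market reviewers"


@dataclass(frozen=True)
class CulturalPack:
    """One market's communication preferences (illustrative fields only)."""
    market: str
    formality: str                   # "formal" or "informal"
    apology_style: str               # preferred apology and politeness strategy
    tone: str                        # e.g. "warm" or "efficient"
    conflict_resolution: str = TBD   # country-specific conflict-resolution pattern
    decision_style: str = TBD        # expectations around hierarchy or consensus


# Placeholder values that paraphrase the examples in this post.
DEFAULT_PACK = CulturalPack(
    market="default",
    formality="formal",
    apology_style="apologize briefly, then solve the problem",
    tone="efficient",
)

PACKS = {
    "JP": CulturalPack(
        market="JP",
        formality="formal",
        apology_style="acknowledge the inconvenience first, in humble language",
        tone="humble",
    ),
    "AE": CulturalPack(
        market="AE",
        formality="formal",
        apology_style="lead with empathy and reassurance; do not open with a discount",
        tone="dignified and respectful",
    ),
}


def get_pack(market: str) -> CulturalPack:
    """Look up a market's pack, falling back to the default when none exists."""
    return PACKS.get(market.upper(), DEFAULT_PACK)


print(get_pack("jp").apology_style)
```

Keeping the packs as data rather than code means in-market reviewers could own and update them without touching the model or the application logic.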

Once these cultural packs are defined, the next step is figuring out how to actually apply them in our AI workflows. Here are a few ways they can be integrated into existing systems:

· As soft prompt templates or tone layers for GenAI outputs (Example: "You are responding as a customer support agent in Japan. Use formal, humble language and prioritize acknowledgment of inconvenience.")

· Through adapters or guardrails during fine-tuning

Adapters are lightweight tuning layers that adjust the AI's behavior for specific markets or cultural styles. Guardrails act as built-in constraints, helping prevent things like tone mismatches, overly blunt responses, or culturally off-key suggestions. Think of it as adding a cultural safety net. For example, a guardrail for the UAE might instruct the model: “Avoid offering a discount right away. Start with empathy and reassurance instead.”

· As part of user persona detection logic in AI agents

AI systems can be built to adjust their tone or interaction style based on the user’s profile, preferences, or location.

For example, if the AI detects a user in Germany, it could respond more directly and efficiently. If it detects a user in Korea, it might respond more deferentially and context-sensitively.
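
The three integration ideas above can be sketched together in a few lines of Python: pick a tone instruction from the user’s locale, then run a very simple guardrail over the reply. This is a rough sketch under stated assumptions. The function names and the instruction table are hypothetical, the instructions paraphrase the examples in this post, and the keyword check stands in for what would really need human-designed rules.

```python
import re

# Illustrative tone instructions per market, paraphrasing the examples above.
TONE_INSTRUCTIONS = {
    "JP": ("You are responding as a customer support agent in Japan. "
           "Use formal, humble language and prioritize acknowledgment of inconvenience."),
    "AE": "Avoid offering a discount right away. Start with empathy and reassurance instead.",
    "DE": "Respond directly and efficiently.",
    "KR": "Respond deferentially and pay attention to context.",
}
DEFAULT_INSTRUCTION = "Respond politely and clearly."


def market_from_locale(locale):
    """Extract the region from a tag like 'ja-JP' or 'de_DE'; None if there is none."""
    parts = re.split(r"[-_]", locale)
    return parts[-1].upper() if len(parts) > 1 else None


def build_system_prompt(locale):
    """Choose the tone instruction for the user's market, with a neutral fallback."""
    return TONE_INSTRUCTIONS.get(market_from_locale(locale), DEFAULT_INSTRUCTION)


def violates_uae_guardrail(reply):
    """Flag replies whose first sentence already mentions a discount."""
    first_sentence = re.split(r"(?<=[.!?])\s+", reply.strip(), maxsplit=1)[0]
    return "discount" in first_sentence.lower()


print(build_system_prompt("ja-JP"))
print(violates_uae_guardrail("Here is a 10% discount. Sorry for the trouble."))
```

Location is a blunt signal for culture, and a keyword match is a blunt guardrail. Both are shown only to illustrate where the cultural pack plugs in, and both would need review by the in-market experts mentioned earlier.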

4. Redefine success in AI

A model that sounds fluent doesn’t always feel right to the person reading or hearing it. In localization, we’ve known for a long time that quality is often subjective. Remember those never-ending “this text sounds weird” conversations with internal stakeholders? Anyone? What’s considered “natural” or “respectful” in one market might feel robotic or even inappropriate in another.

We’re likely to face the same reality with AI-generated content.

Success can’t be measured by fluency or speed alone. We need to ask:

· Does this tone feel trustworthy in this culture?

· Does it respect the emotional expectations of the audience?

· Would a local team member say it that way?

And you know what? AI cannot answer these questions on its own! They require human judgment, and that’s where our experience in localization can guide the way forward.

What to look for:

Does the AI sound “human” to a native speaker in that region?

Does it come across as respectful, warm, or trustworthy, not just correct?

Are users more likely to accept or reject its suggestions based on tone?

How to measure it:

In localization, we've spent years being asked to prove our value by showing how language adaptation impacts user satisfaction, conversion, or retention. We've built dashboards, shared A/B results, and developed frameworks to justify investment.

Measuring cultural alignment in AI isn't so different.

We’re applying the same mindset, just to a new layer of interaction.

Here are a few ways we can track impact:

· Market-specific UX testing and user interviews

Observe how real users react to tone, structure, or formality in their language and region.

· A/B testing for user satisfaction, task completion, and engagement

Compare culturally tuned versions of prompts or replies against default ones and watch what performs better.

· In-market cultural reviewers using qualitative rubrics

Lean on the same kinds of expert review that localization QA has used for years, but focused on emotional fit and tone.

· Feedback from customer support or social listening teams

What are people saying about the AI’s tone? Is it helping or harming the brand experience? Localization teams are used to tracking this kind of signal.

The tools are familiar; we’re just using them to evaluate cultural resonance in AI instead of translation output.
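
For the A/B testing idea, one common way to check whether a culturally tuned reply really performs better is a two-proportion z-test on satisfaction rates. The sketch below uses only Python’s standard library. The numbers are invented for illustration, and the function name is my own.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Compare two satisfaction rates; return (z, two-sided p-value)."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("Rates are all 0% or all 100%; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented numbers: satisfied users out of all users, per variant.
default_satisfied, default_total = 420, 600   # default replies
tuned_satisfied, tuned_total = 465, 600       # culturally tuned replies

z, p_value = two_proportion_z_test(
    default_satisfied, default_total, tuned_satisfied, tuned_total
)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about why users preferred one version. That is where the in-market reviewers and user interviews come back in.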

5. Let localization professionals lead the way

Localization teams have been bridging cultures and shaping user experiences for decades. We’re the ones who’ve figured out how to make global products feel local. We have translated content, adapted tone, anticipated cultural nuances, and made people feel like the product was made for them. So, as GenAI continues to expand into more user-facing roles, we’re not starting from scratch. In fact, we’re already doing much of what’s needed, and we’re ready to lead.

What we can lead:

Co-creating prompt libraries and tone guides with product and AI teams

Owning cultural QA as part of GenAI review cycles

Advising on voice and tone governance for AI products

Training internal teams on cultural behavior frameworks (on top of the translation rules)

Where to start:

Join LLM implementation task forces

Connect with product owners and AI researchers as early as possible

Position localization not as a service but as a strategic input for culturally intelligent systems

We’ve been building bridges between language and people for years. Now it’s time to build them into AI itself.

Conclusions

Cultural intelligence won’t emerge from algorithms.

AI doesn’t become culturally aware just because it’s multilingual.

We need more than engineers training LLMs; we need cultural orchestrators. We need the data, values, and behavioral signals that define how AI behaves in each culture. Localization professionals are in an excellent position to contribute, because we’ve been doing this for years. It would be a shame not to build on that experience and instead let LLMs behave globally according to the English, Western, and individualistic worldviews that dominate them today.

Seven years ago, I said CQ was the future of leadership (btw, here’s the link to the original post!).

Today, I believe it’s also the future of AI.

Let’s start the conversation:

What would it take to build Cultural LLMs?

How can we embed CQ into AI product development?

And how do we ensure humans remain the ethical and cultural compass in this new era of automation?

People don’t just want accurate answers.

They want to feel understood.

@yolocalizo

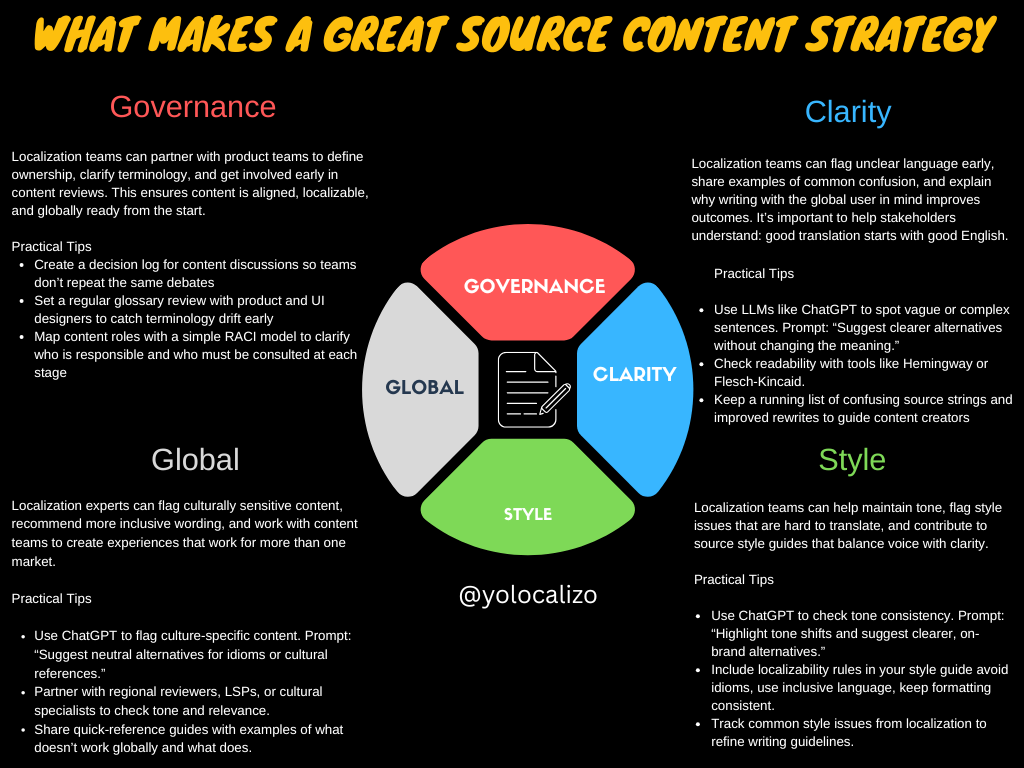

Localization teams collect tons of data, edit distance, LQA errors, fuzzy matches, deadlines met, but too often, that data doesn’t speak the language of business. This post explains how to change that. By linking each localization metric to one of the classic project management levers, quality, time, or cost, and showing its real-world impact, we can turn linguistic KPIs into insights that leaders understand, value, and act on.