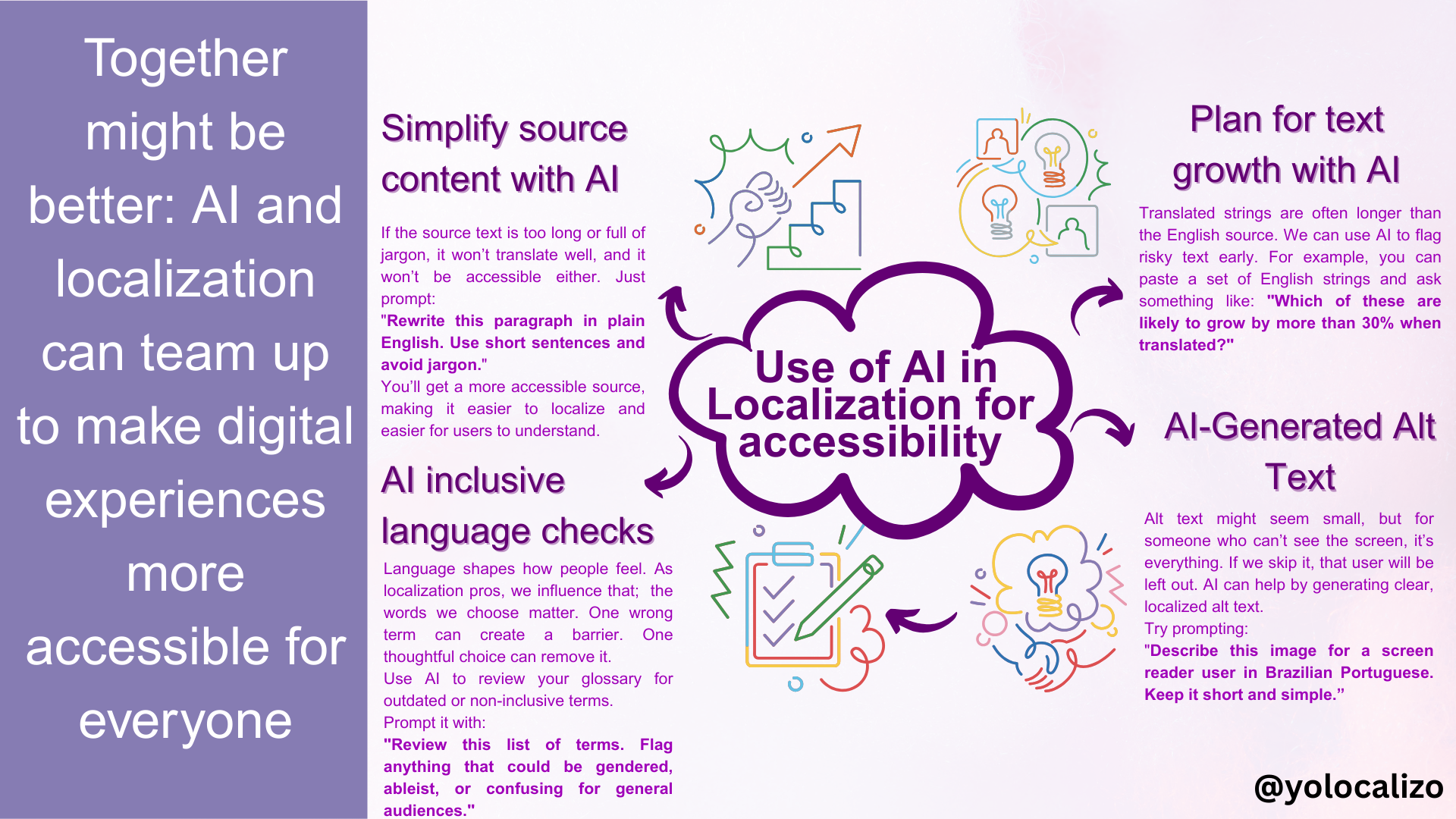

The HOW: using AI in localization for accessibility

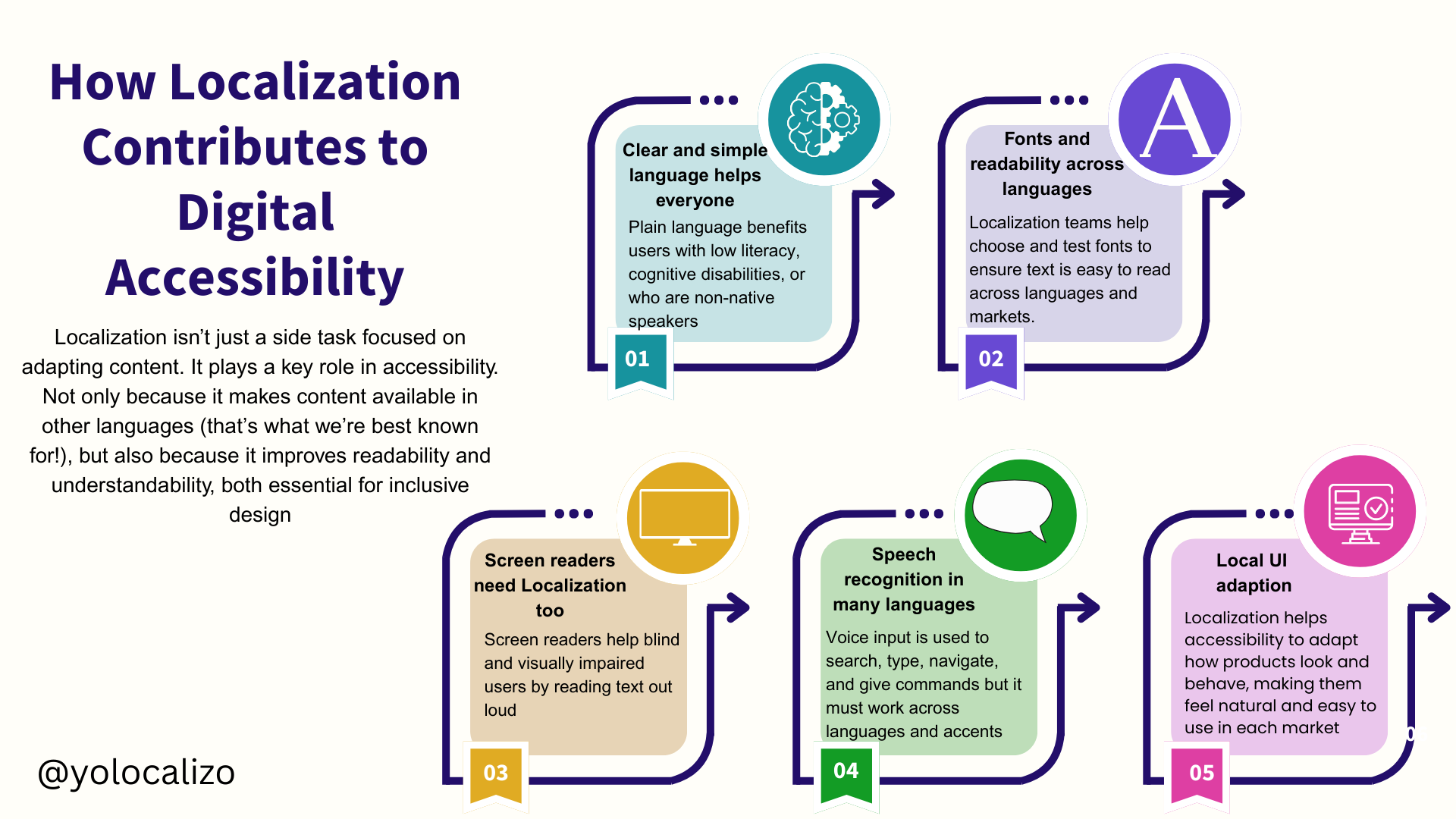

In my previous post, I discussed how localization contributes to accessibility by translating content and helping make products easier to read, hear, and use. That was the "why."

This post is about the "how."

Now, I know what you might be thinking. AI is often seen as the enemy, as the devil. I've lost count of how many doomsday posts I've read over the past couple of years, saying translators are done or that AI will wipe out the entire localization industry. And it's not just online. I've heard the same kind of talk at events I attended in the last couple of years, like LocWorld and GALA, or even during informal chats at LocLeaders or Women in Localization meetups.

But today, I want to extend a hand to AI (I hope I won't regret it!). What I really want to explore is this idea that keeps popping into my head: that together is better.

AI doesn't have to replace us. It can help us, mainly when it comes to something as important as accessibility. It can speed things up, flag problems early, and help us scale the work we're already doing without losing the human touch that makes localization great!

Start with the real problems

Let's start with what really happens when we work on localization projects.

Sometimes the English source content is too long or too complex. It feels like it was written for a legal document, not for users. Honestly, sometimes when I get new content, it feels like if you're not a lawyer, you won't get it! And, of course, when we try to localize that kind of content, things can go wrong quickly. The translation often gets longer and doesn't fit on the screen. That breaks the layout, and on mobile devices it breaks things even more.

Sure, screens have come a long way since Steve Jobs introduced that legendary 3.5" iPhone back in his 2007 keynote. We're now close to 7-inch displays. But even with all that space, mobile UI still brings a real challenge. Text gets cut off. And when that happens, understanding what's on the screen becomes harder. For any user, that's already a problem. But when it comes to accessibility, that problem gets even bigger.

I still remember when I was working as a studio manager at Enzyme Labs, which is now part of the Keywords family. We were doing LQA testing on a big AAA sports simulator, one of those games that fans wait all year for. The German version had such long text in so many places that it completely broke the main menu. Buttons overlapped, labels were cut off, and players couldn't even read what they were selecting. This was a serious issue caused by a lack of planning for text expansion. And yes, we fixed it, but it cost time (and many pizza dinners) and energy that could've been avoided.

Sometimes, the translation fits, but it's still hard to understand. You read it once, then again, and it still doesn't feel right. If it's hard for us, it's even harder for someone with reading fatigue or cognitive challenges. I tend to think of that as more than a style issue; it's an accessibility issue.

These issues don't just slow down the team; they directly affect users who need accessible content. When layouts break or text is too hard to follow, some users simply can't continue. For someone using a screen reader or dealing with a high cognitive load, that moment can be a blocker. AI gives us a chance to spot these risks earlier and fix them before they turn into barriers.

In the following paragraphs, I will show you 5 ways in which AI can be our ally in making our content more accessible.

Click HERE to download the infographic

1.- Use AI to simplify content before Localization

If your English source text is too long or full of jargon, it won't translate well (and it won't be accessible!). You can use tools like ChatGPT to rewrite content in simple language. Prompt it with something like:

"Rewrite this paragraph in plain English. Use short sentences and avoid jargon."

This is useful during source reviews or when you want to audit content before it reaches translators.

When content is overly complex, it complicates life for translators and creates additional challenges for users with accessibility needs. If someone has reading difficulties or is already under a high cognitive load, a long and messy sentence can stop them right there. I still remember a time when a senior translator at one of our LSPs reached out to ask what we were trying to say in a particular string of the onboarding tutorial, because it was very difficult to understand. If we need a second take, imagine what that means for someone using a screen reader.
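Before sending everything to an AI tool, it can help to shortlist the strings that most need the plain-English prompt. Here is a minimal sketch of that source audit, assuming strings arrive as a simple ID-to-text mapping. The function names and the 20-word threshold are my own assumptions for illustration, not an industry standard, so tune them for your content.

```python
import re

# Assumed threshold for "too long"; adjust it for your own style guide.
MAX_WORDS_PER_SENTENCE = 20


def long_sentences(text, max_words=MAX_WORDS_PER_SENTENCE):
    """Return the sentences in `text` that contain more than `max_words` words."""
    # Naive split on sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]


def strings_to_simplify(source_strings, max_words=MAX_WORDS_PER_SENTENCE):
    """Map each string ID to its over-long sentences, skipping clean strings."""
    flagged = {}
    for string_id, text in source_strings.items():
        hits = long_sentences(text, max_words)
        if hits:
            flagged[string_id] = hits
    return flagged
```

The strings this returns are the ones worth pasting into the rewrite prompt first. Sentence length is only a rough signal, so a human review of the result still matters.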

2.- Predict UI risks with text expansion

Translated strings are often longer than the English source. This breaks so many layouts.

The interesting thing now is that we can use AI to flag risky text early. For example, you can paste a set of English strings and ask something like: "Which of these are likely to grow by more than 30% when translated?" It's often said that localized content tends to be around 30% longer than English. It's not a scientific rule, but it's widely accepted in our field. Think I'm exaggerating? Bear with me. How long is the button label View in English? Four characters. Now let's look at the Italian version, Visualizzazione: fifteen. Oh well, I think I just convinced you 😊

Some LLMs can simulate how long a translation might be based on typical language patterns, so embrace AI (at least for this!) and let it help you plan for expansion in the UI.

When layouts break, the ones who pay the price are the users who already have a harder time navigating the product. If a button label gets cut off or a menu overlaps, someone using assistive tech might not be able to move forward at all. What looks like a small UI issue to us can completely block their experience. That's why spotting these risks early matters. Yes, it saves QA time, but more importantly, it means people can actually use the software we are localizing.
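The same 30% rule of thumb can also be turned into a quick pre-check that runs before any translation exists. The sketch below is a rough illustration under my own assumptions: the function names are hypothetical, each string has a known character limit from the UI design, and the flat 1.30 factor is the industry rule of thumb mentioned above, not a measured value.

```python
import math

# Rule-of-thumb expansion factor (about 30%); an assumption, not a measurement.
EXPANSION_FACTOR = 1.30


def expansion_ratio(source, translation):
    """Relative growth of a translation versus its source (0.30 means +30%)."""
    return (len(translation) - len(source)) / len(source)


def flag_expansion_risks(strings, char_limits, factor=EXPANSION_FACTOR):
    """Return (id, source_len, estimated_len, limit) for strings likely to overflow."""
    risks = []
    for string_id, text in strings.items():
        limit = char_limits.get(string_id)
        if limit is None:
            continue  # no known UI limit for this string, nothing to compare
        estimated = math.ceil(len(text) * factor)
        if estimated > limit:
            risks.append((string_id, len(text), estimated, limit))
    return risks
```

For the example above, `expansion_ratio("View", "Visualizzazione")` gives 2.75, which is growth of 275%. Short labels can expand far beyond 30%, so a flat factor understates the risk for buttons and menu items, and it is worth reviewing those by hand or asking an LLM for per-language estimates.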

3.- Alt text suggestions for localized images

If you're localizing visual content (screenshots, banners, or tutorials), don't forget the alt text! Screen reader users rely on it to understand what's on the screen.

AI can help generate localized alt text that's short, accurate, and relevant for each market. You can use prompts like:

"Describe this image for a screen reader user in Brazilian Portuguese. Keep it short and simple."

Alt text might feel like a small detail, but it's a big deal for someone who can't see the screen. If we skip it, that user gets left out of the experience. And not just in one market but in all of them. However, when we get it right, we're making sure visual content works for everyone. Plus, it saves local teams time, as no one wants to write descriptions for ten banners at the last minute! (I've been there!)

4.- Glossary + Terminology checks for inclusive language

Language is powerful. The words we choose shape how people feel, how they see themselves, and how they experience the world around them. That's why we, as localization professionals, carry a responsibility: we influence perception. When we use language that excludes, even without meaning to, we build barriers. When we choose words that convey welcome and respect, we remove them. For me, that's what accessibility really is. It's all about removing barriers, and unfortunately, an inappropriate word, even an unintentional one, helps create them. But it does not have to be like that anymore; we can rely on our "AI friend." We can use AI to review our glossary and spot terms that may be outdated, non-inclusive, or unclear. You can prompt it with something like:

"Review this list of terms. Flag anything that could be gendered, ableist, or confusing for general audiences."

You can also train your AI workflows to always suggest more neutral or inclusive alternatives when flagged terms appear.

Why this helps is simple: words carry weight. The way we phrase things can either bring people in or push them out. I remember doing a narrative culture audit some time ago, where we identified some in-game strings that used the word "crazy" to describe a bonus level. It seemed playful until a reviewer mentioned how that might land for someone with a mental health condition. We swapped it for "wild." Same meaning, better impact.
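Once a review like this has produced a list of flagged terms, a small script can keep checking new strings against it. This is only a sketch under my own assumptions: the term list, its suggested alternative, and the function name are illustrative (seeded with the "crazy" to "wild" swap from the story above), and the real list should come from your own glossary review.

```python
import re

# Hypothetical review list: flagged term -> suggested alternative.
FLAGGED_TERMS = {"crazy": "wild"}


def check_terms(strings, flagged=FLAGGED_TERMS):
    """Return (string_id, term, suggestion) for every flagged term found."""
    findings = []
    for string_id, text in strings.items():
        for term, suggestion in flagged.items():
            # Whole-word, case-insensitive match so "Crazy" is caught too.
            if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE):
                findings.append((string_id, term, suggestion))
    return findings
```

A match is a prompt for a human decision, not an automatic replacement: context decides whether the suggestion fits.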

Final Thoughts

We're already doing a lot of accessibility work through localization; you just may not call it that yet. Every time we choose simpler language, check UI layouts in other languages, or replace a term that might hurt someone, we're contributing to a more accessible experience. We've been doing this for years.

Now that AI is becoming part of the discussion, it’s worth taking a moment to explore its potential and how it can positively impact our experiences. Many in our industry see it as a threat. I get it. I've seen the buzz and noise everywhere. But maybe it's time to give peace a chance just for a moment.

What if we stop seeing AI as the enemy and start seeing it as a partner? One that helps us move faster, catch more, and scale the kind of accessibility work we already care about. We bring the context, empathy, and judgment. AI brings the speed, pattern spotting, and scale. Together, we can create digital experiences that more people can use, enjoy, and feel included in.

Let's build from there.

@yolocalizo

AI will not eliminate localization roles (at least not initially), but it is reducing the time spent on certain tasks. What once took hours can now take minutes. That creates capacity. We can treat that time as a cost saving or reinvest it. If nothing meaningful replaces it, the value of the role will eventually be called into question. Jobs do not disappear because tasks are automated. They disappear when the value is not redefined.

So the real question is: what can you do now with the time AI gives you that wasn't possible before?